I’m a Ph.D. student in Computer Science at Virginia Tech, advised by Tu Vu. I received my Master’s in Language Science and Technology from Saarland University, where I worked on efficient transfer learning and low-resource NLP with Dietrich Klakow and Vera Demberg. Earlier, I contributed to NLP research on historical archives at Academia Sinica, and I was selected as a Google CSRMP Fellow in 2023.

My research focuses on developing efficient, modular LLMs for multilingual, multitask, and multimodal deployments. I approach this through the lens of collaborative and communal machine learning. Specifically, I work on:

- Efficient model development: Developing methods to make alignment updates faster, cheaper, and more reusable, enabling continual adaptation across evolving model architectures.

- Parameter-efficient transfer learning: Modularizing task and document knowledge into reusable components for scalable transfer.

- Advanced reasoning: Building reasoning-capable multi-LLM systems and modular agents for complex, multilingual, and multimodal tasks.

- Instruction tuning: Enhancing LLM instruction-following capabilities to improve reasoning accuracy and factual responses.

- Data-centric methods: Designing data selection strategies to improve performance and efficiency in low-resource scenarios.

I am seeking an internship position for 2025. My CV is available here.

🔥 News

- 2024.10: One paper accepted to EMNLP 2024 Industry Track.

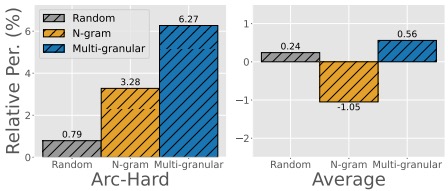

- 2024.09: Paper "Target-Aware Language Modeling via Granular Data Sampling" accepted to EMNLP 2024.

- 2024.08: Started my Ph.D. at Virginia Tech.

- 2024.07: Paper "Exploring the Effectiveness and Consistency of Task Selection in Intermediate-Task Transfer Learning" accepted to ACL 2024 SRW.

- 2024.03: Visiting Taipei in March and April. Feel free to reach out if you’re there!

- 2024.02: Successfully defended my Master’s thesis, "Exploring Task Selection for Intermediate-Task Transfer Learning".

- 2024.02: Paper "Modeling Orthographic Variation Improves NLP Performance for Nigerian Pidgin" accepted to LREC-COLING 2024.

- 2024.01: Paper "Projecting Annotations for Discourse Relations" accepted to CODI @ EACL 2024.

📝 Selected Publications

Please see Google Scholar for an up-to-date publication list.

* indicates equal contributions

Ernie Chang, Pin-Jie Lin, Yang Li, Changsheng Zhao, Daeil Kim, Rastislav Rabatin, Zechun Liu, Yangyang Shi, Vikas Chandra

EMNLP 2024

[Paper]

Using ~1% of RefinedWeb data to match full pretraining performance.

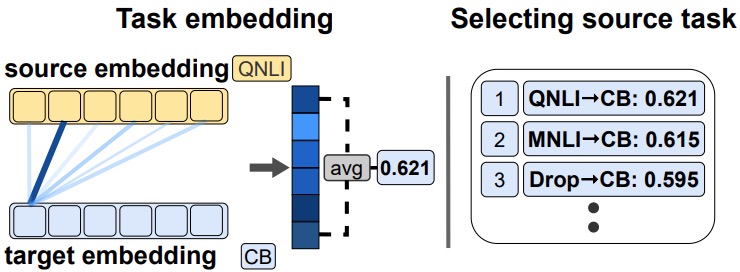

Pin-Jie Lin, Miaoran Zhang, Marius Mosbach, Dietrich Klakow

Student Research Workshop at ACL 2024

[Paper] [Code]

Achieving robust results in modular selection via point-wise similarity.

Pin-Jie Lin, Merel Scholman, Muhammed Saeed, Vera Demberg

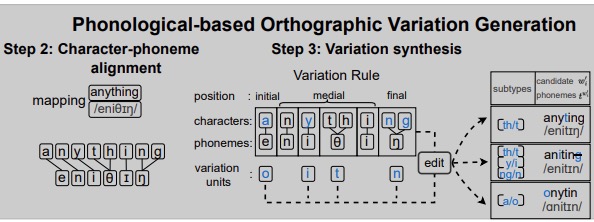

LREC-COLING 2024

[Paper]

Generating synthetic data from a phonological-theoretic, parameter-free framework.

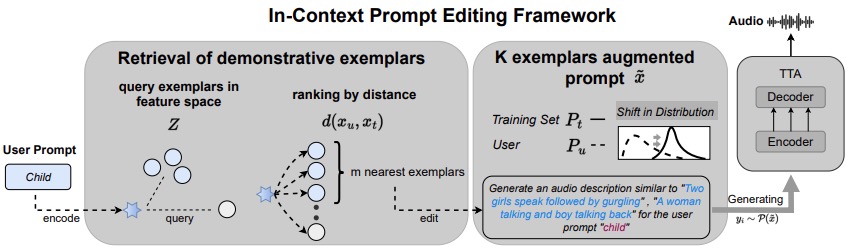

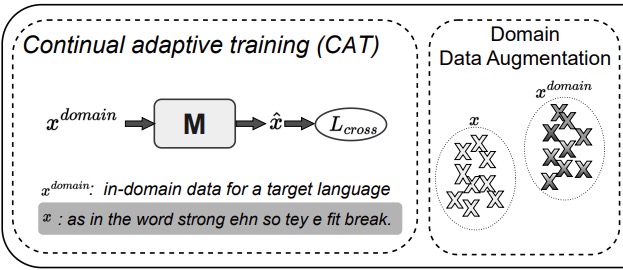

Ernie Chang*, Pin-Jie Lin*, Yang Li, Sidd Srinivasan, Gael Le Lan, David Kant, Yangyang Shi, Forrest Iandola, Vikas Chandra

ICASSP 2024

[Paper]

Featured in Hugging Face Daily Papers and selected by Jordi Pons.

Pin-Jie Lin*, Muhammed Saeed*, Ernie Chang*, Merel Scholman

Interspeech 2023

[Paper]

Improving low-resource performance through mixed-language adaptation.

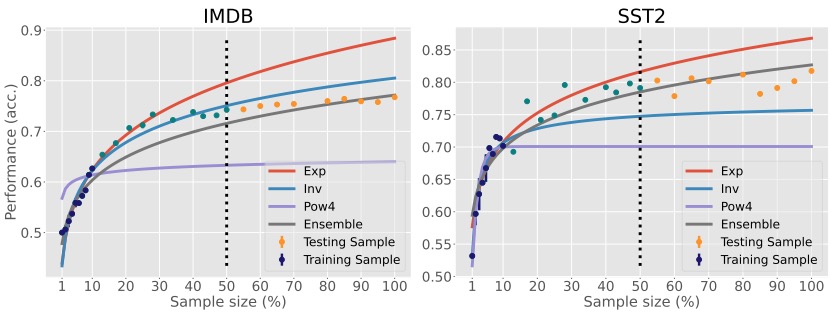

Ernie Chang*, Muhammad Hassan Rashid*, Pin-Jie Lin*, Changsheng Zhao, Vera Demberg, Yangyang Shi, Vikas Chandra

ACL 2023 Findings

[Paper] [Code]

Revisiting sample size estimation to improve data efficiency in NLU tasks.

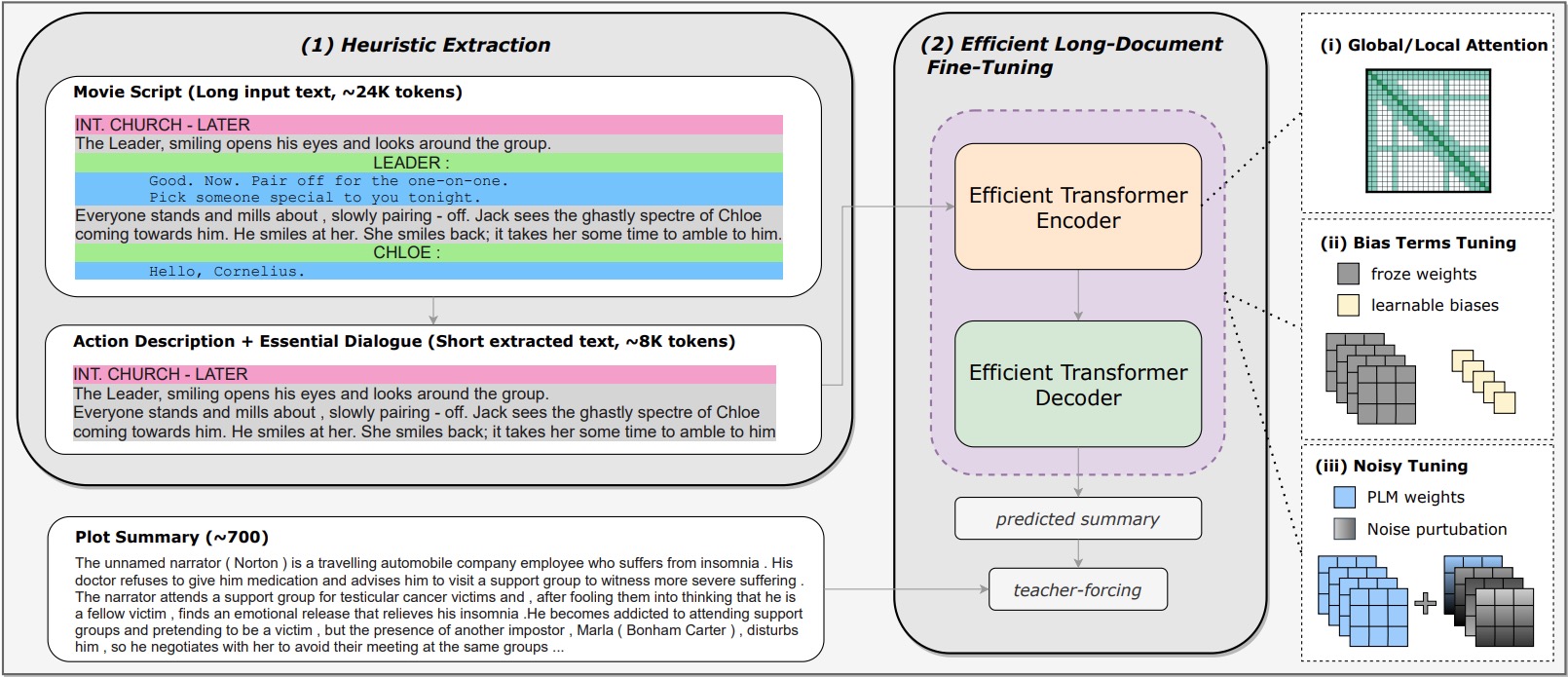

Dongqi Pu*, Xudong Hong*, Pin-Jie Lin*, Ernie Chang, Vera Demberg

COLING 2022

[Paper]

Achieving top performance in movie script summarization.